The Challenge of Coexisting with AI

Article By Juan Carlos del Rio

The idea of artificial intelligence is not new. Since its beginnings, humankind has tried to create machines and automata, either to place them at our service or to extend our human capabilities. Many of these stories reflect admiration for such creations and, at the same time, the fear of losing control over these advanced technologies. We might recall some Greek myths, such as Talos, the bronze giant killed by Medea, or the ivory statue of Galatea brought to life by Pygmalion. The Middle Ages and the Renaissance produced other legends about the creation of living beings, such as the enigmatic Baphomet of the Templars or the homunculi of Paracelsus. To these we can add the Jewish traditions about the Golem, a being made of clay and brought to life by a magic ritual. In modern times we have the monstrous creation of Dr. Victor Frankenstein in Mary Shelley’s novel, and the wooden boy Pinocchio, carved by the carpenter Geppetto in Carlo Collodi’s novel. Both stories call on science and human action to stay within ethical limits, a fundamental point, as we shall see later on.

Other automatons (a word coined by the Greeks) were also invented: artifices or devices that worked like clockwork and appeared to behave autonomously or intelligently. The first “robots” (a term derived from the Czech word for forced labor) emerged in the 20th century. Interestingly, in his Politics [1], Aristotle imagined instruments able to perform their own work and observed that they would make slavery unnecessary.

The development of artificial intelligence (AI) began in the 1950s. Since then, the field has sought to mimic human intelligence: first with algorithms, sequences of logical instructions designed to achieve a specific goal, and then with expert systems that encoded established human knowledge as rules. Machine learning reduced this dependence on a fixed body of hand-coded knowledge by letting systems learn from data. Neural networks were then developed to handle complex data and non-linear relationships, while computer vision enabled pattern recognition, imitating the way humans perceive and interact with their environment. Finally, natural language processing made it possible for machines to interact with human beings in ordinary language. Although the technology is still far from mature, its rapid progress never ceases to amaze us. It is now possible to generate voices, photos, and even videos that simulate human appearance, and in the near future we may lose the ability to distinguish fact from AI-generated fiction.

Some of the inventions we have discussed have provoked fear, because they could become more powerful than us, replace us, or be exploited to dominate the world. In addition, some machines’ close resemblance to humans can be unsettling. These fears make coexisting with machines a genuine challenge.

Ethical Issues

We face a number of ethical concerns related to AI, such as:

Algorithmic discrimination. AI systems are trained on data that may contain cultural and social biases, and they can reproduce those biases, leading to discrimination and injustice in areas such as employment, housing, and lending. Numerous instances of algorithmic bias have been documented. [2]

Lack of transparency in algorithms. Many AI algorithms reach their decisions in ways that are opaque and difficult to understand, which makes their use hard to audit. This is particularly concerning in areas such as healthcare, where AI decisions can have significant consequences for people’s health and well-being.

Accountability. Who is responsible when an AI algorithm makes a bad decision: the programmer, the company that deployed it, or the algorithm itself? If “taking responsibility” means feeling a heightened sense of duty toward established norms and accepting the positive or negative consequences of one’s actions, then responsibility is bound up with consciousness, and this makes the actions of AI, which lacks consciousness, deeply problematic.

Autonomous weapons. Should autonomous weapons, such as drones, be permitted to carry out police or military operations? Allowing them could escalate conflicts and cause humans to lose control over the use of military force. The fictional scenario of the Terminator series could become reality if a “Skynet”-like system capable of independently controlling the U.S. military arsenal were ever built.

It is critical to ensure that human beings retain control and responsibility for decisions that impact their welfare or lives in critical areas such as medicine, social justice, national security, and defense. It is not advisable to grant AI absolute control over its actions.

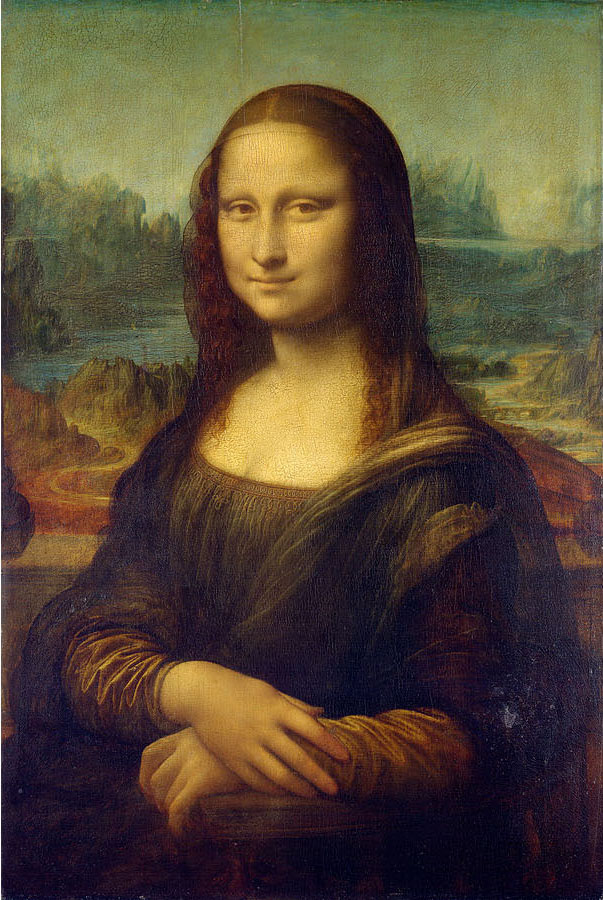

AI is penetrating domains in which we believed ourselves unsurpassable. We thought we were the only rational animals; across many cultures and traditions, the mind has been the defining feature that sets us apart from other animals. Yet machines have progressively emulated and surpassed human abilities, and no longer only in strength or physical capability. First came mathematical calculation: it is common knowledge that a basic calculator or a smartphone can complete a ten-digit division faster than we can. Next came memory: a small chip can now store more books than the famous Library of Alexandria. AI has also demonstrated remarkable pattern recognition, quickly detecting similarities and differences, which helps, for example, with spatial orientation when reading a map. Recently, AI has made breakthroughs in natural language processing, showing that it can translate, summarize, or write texts, in some cases more proficiently than humans. We are also discovering the artistic capabilities of AI in creating images, music, and poetry: it learns from earlier styles and combines elements of its training data to generate novel output, just as we do.

Human Intelligence

Critics of AI argue that relying on it keeps us from developing certain mental capacities, such as reading, reflection, memory, and writing. We must admit that the mind is a fundamental element in human development. Esoteric traditions suggest that the “divine spark”, or mental spark, is essential to human nature, and that the task of the human being is to discover and cultivate this gift through spiritual practice and the search for truth. Machines, however, have no such concepts of purpose or transcendence. If we lose our mental aptitudes, we could become “less human”.

We have long considered ourselves the only intelligent beings, because animals, although they may exhibit intelligent behavior, are not aware of it. This is the fundamental difference between us and other creatures. While AI systems may be highly intelligent, or even surpass us in some areas, they lack self-awareness and the ability to make independent decisions. They are tools designed to help humans achieve specific objectives, but they have no understanding of themselves or the world around them. AI is not a replacement for humans, but rather an extension of our abilities through highly advanced programming. It is not a form of human knowledge, as the human mind is not a computer.

People are amazed by the intelligent capabilities of AI because they focus on abstraction and reasoning while ignoring other human aspects, such as emotional or social intelligence. In this sense, it could be argued that machines are not truly intelligent, if we define intelligence as the ability to choose between different options or situations, understand them, and synthesize information to make the best decision. Human beings possess creativity, imagination, empathy, critical thinking, curiosity, and passion, qualities that AI lacks.

AI processes information through a set of logical and mathematical instructions. It is deterministic and cannot make autonomous or creative decisions beyond what it was programmed to do. Humans learn through subjective experience and exploration, whereas AI relies only on mathematical and statistical patterns. Furthermore, machines have no intentions or purposes of their own; they can only operate on the instructions given to them. They do not have goals or desires as human beings do.

The misuse of AI in society can have negative consequences. In the Cambridge Analytica case, for example, AI-driven profiling of data harvested from Facebook users was used in an attempt to influence the outcome of elections and referendums. Additionally, some AI-powered search engines have become a “truth machine” that shapes how people perceive and understand information. [3] China’s Social Credit System is also concerning. This AI-supported system evaluates and monitors the behavior of citizens and businesses across many aspects of daily life, including finance, education, security, health, and morality, and awards points accordingly. These points can then determine access to benefits such as public services, loans, employment, and travel.

And yet, despite our misgivings, we must acknowledge that AI’s objectivity, its ability to process vast amounts of data, and its capacity to weigh numerous factors when making decisions could greatly help in organizing society. AI systems, with their superior intelligence, rationality, and freedom from subjectivity and prejudice, could potentially be fairer than humans and even participate in governing our society. They could also make transportation safer, with fewer accidents than human drivers cause.

Moreover, because they are always available and can communicate with us, perhaps they could be our companions, at least for the hundreds of millions of elderly people who live in solitude in this dehumanized world. This is already happening in Japan, for example. Curiously, the excessive technologization of society has turned us into more isolated, more solitary beings, and yet the remedy could be precisely to implement more technology.

In conclusion, we need a “friendly AI” [4] that considers the long-term consequences of its actions and decisions. The goal would be to create systems that are not only efficient, but also safe and beneficial to society. To this end, we need to build ethical values into their design and enable them to learn and adapt as they are used. In addition, we should urge governments to collaborate with the scientific community on legislation that protects individual rights and sets criminal penalties for the misuse of AI. This will require companies to sit down with institutions, governments, psychologists, philosophers, and human rights organizations to ensure that every aspect of this technology has been considered.

[1] “For if every instrument could accomplish its own work, obeying or anticipating the will of others, like the statues of Daedalus, or the tripods of Hephaestus, which, says the poet, ‘of their own accord entered the assembly of the Gods;’ if, in like manner, the shuttle would weave and the plectrum touch the lyre without a hand to guide them, chief workmen would not want servants, nor masters slaves.” Politics, Ch. II “On Slavery.”

[2] See, for example, books with such telling titles as Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, by Cathy O’Neil; Algorithms of Oppression: How Search Engines Reinforce Racism, by Safiya Umoja Noble; or Data vs Democracy: How Big Data algorithms shape our opinions and alter the course of history, by Kris Shaffer.

[3] Other interesting books are Google and the Myth of Universal Knowledge, by Jean-Noël Jeanneney, and Googled: The End of the World as We Know It, by Ken Auletta.

[4] We use the term coined by Eliezer Yudkowsky. You can read about it at https://www.kurzweilai.net/what-is-friendly-ai.

The entity posting this article assumes responsibility for ensuring that the images used in this article have the requisite permissions.

Image References

Pexels - Tara Whitestead

Read the original article on https://www.revistaesfinge.com/wp-content/uploads/revista/Esfinge-2023-05.pdf

Permissions required for the publishing of this article have been obtained

Article References

Translated from Spanish. The article was published first in 'Revista Esfinge' in May 2023.

“AI is not a replacement for humans, but rather an extension of our abilities through highly advanced programming. It is not a form of human knowledge, as the human mind is not a computer.”

I appreciate that you mention AI being an “extension of our abilities”.

But it’s also true that every invention that mankind has made is an extension of our abilities.

– hammer to help humans build

– knives, guns to help humans cut, kill

– books to help us record, entertain, etc

If AI is just another invention, and therefore simply an extension of us, why is it so feared? Maybe “feared” is the wrong word, but the concern is relevant. I think there is a fine line that humans should recognize: the point when AI starts recognizing itself, recognizing its own existence. Will that ever happen?

Right now, it seems that humans are more worried about the influence of AI. This worry seems very similar to the one that arose when books were invented, radio started, or TV and movies became a hit. That content became real, and mankind absorbed it and obsessed over it. I think that’s what we are really worried about: when AI content becomes way too real.

This was fun, I appreciate your thoughts.