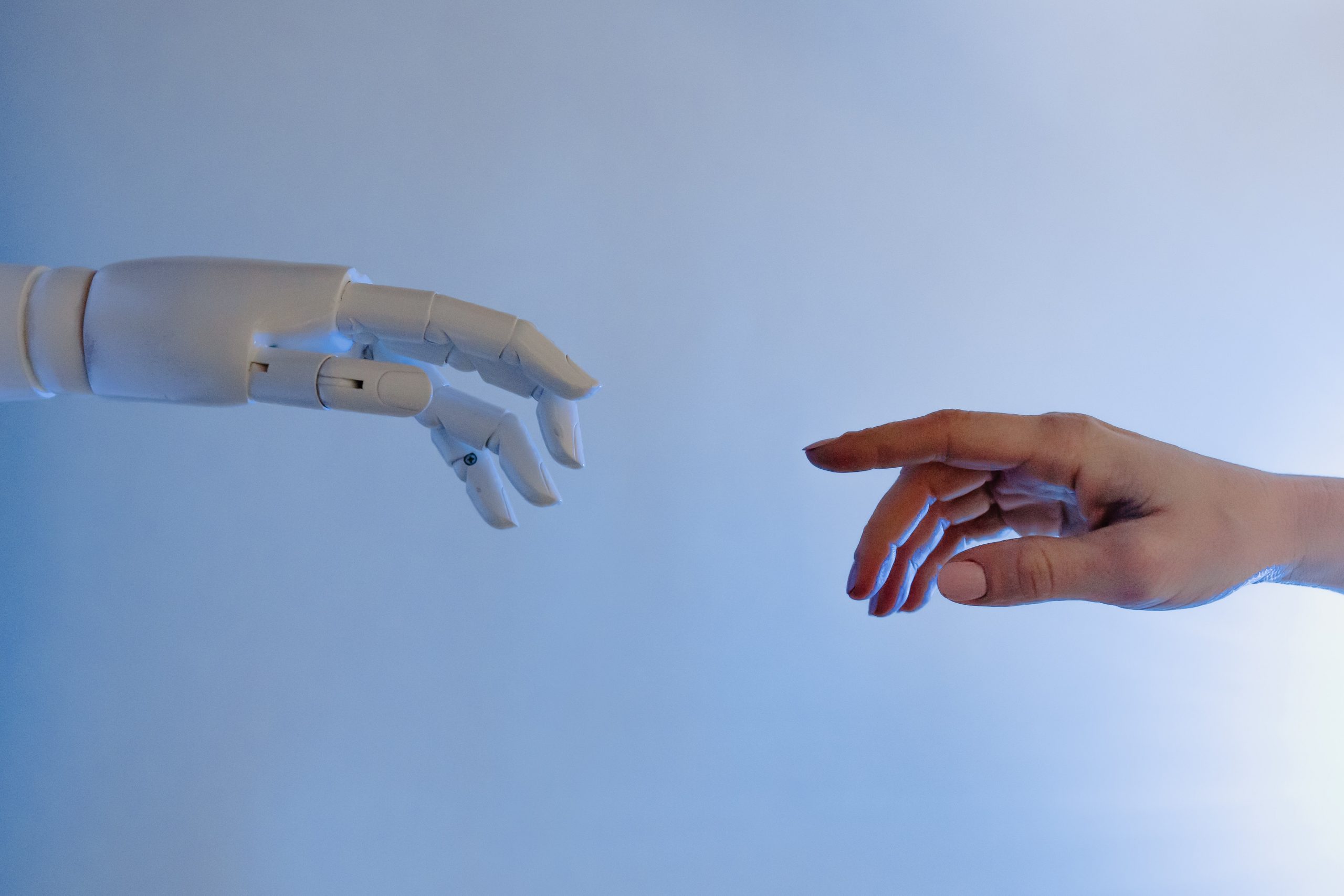

The Rise of the Machines?

Article by Peter Fox

The principle of the modern programmable computer was proposed by Alan Turing in a 1936 paper, in which he showed that a “Universal Computing Machine” would be capable of computing anything that is computable by executing instructions from a program stored on tape. Since then, the astonishing development of computing hardware has taken us from tape to transistors, billions of which we now carry around in our pockets. These hardware advances have made it possible to store far more complex programs, which can execute thousands of trillions of calculations per second. Programs have now reached the point where they can perceive their environment and take actions to achieve their goals. This is known as Artificial Intelligence, or AI.

Hollywood has long played with the idea of an AI that destroys or enslaves humanity in dramatic fashion. However, the likelihood of that happening is much lower than Hollywood directors and screenwriters would like us to believe. That is not to say that we shouldn’t proceed with caution when developing and using AI. AI is going to be widely deployed: in cars, trucks, manufacturing machines, computers and smartphones, and potentially in military technology. It is perhaps in these areas that we have a more immediate need for caution.

AI will provide safer trucks and cars that can reach their destinations faster, which is naturally beneficial to society, but it will also cost jobs in those industries, which could constitute a threat to human dignity. AI could also replace nurses, soldiers and police officers, roles that require empathy; without that empathy, the consequences could be significant. Furthermore, the amount of information an AI can learn about us could give too much power to corporations and governments.

Military technology is the most dangerous area that AI could influence. Very recently, the Korea Advanced Institute of Science and Technology (KAIST) opened a new Research Centre for the Convergence of National Defence and Artificial Intelligence. Fifty-seven scientists subsequently called for a boycott of the institute, fearing that the centre would be used to develop autonomous weapon systems.

However, there is a certain inevitability about the development of artificial intelligence. Perhaps the task now is to ensure that those with the ability to develop and fund AI research do so with something we should all strive for in ourselves: wisdom. It is important that the machines we create have the correct fail-safe mechanisms to protect us, and that their designers have the right motivations from the outset.

Many modern philosophers and scientists, including the late Prof. Hawking, have considered the prospect of our own self-destruction through the creation of AI. However, this far-off doomsday scenario should not distract from the immediate social and ethical issues that AI raises. Despite its dangers, AI should also be recognised for its potential to help humanity tackle many of the problems we face, such as disease and poverty.

Image Credits: By Sujins | Pixabay | CC0

Like an infant accidentally touching a gun trigger and the bullet accidentally striking someone down, a computer is, in itself, innocent. Unless, of course, it was pre-loaded with malevolent data, in which case the coder is guilty.

As more and more human attributes are fed into computer brains, they’ll become more and more human-like. With infinite raw data going in, computers could one day become ‘wise.’

The scary thing is that computers have human brains guiding them.

Sure, there could be random cross-overs in computer engines, and accidental juxtapositions of intent.

But that’s a risk technology needs to safeguard against.

Will man always be in the driver’s seat, or one day will the cyber world go out of control?

Will computers become so smart that they start programming themselves?

If the cyber world goes out of whack, there could be a pre-designed Plan B to tackle it.

If humans continue to have control, then there’s a problem.

Considering all the things that computers can do that man can’t, I’m all for maximising the capability of computers.

Computers taking over from man? Aww, that should be fun. : )